Merge file parts/chunks in S3 on receive: invoke a Lambda on PUT object

Sending files in chunks over the network is common because it is easy to retry only the parts that fail. Once all the parts have arrived, they are merged back into a single file. We all know how frustrating it is to see a download fail at 99%.

Chunking is also how networks, including your Wi-Fi, move data: everything is split into packets. Splitting the data into packets means the transmission does not depend on any single path through the network staying available. Once the data has been delivered, the receiving side responds with a final confirmation. Say, 200 OK!

In a common scenario, you receive a file in your S3 bucket as multiple parts instead of one large, bulky object, and you might need to merge those parts as soon as they have all arrived.

An effective way to do this is to use S3 event notifications to invoke an AWS Lambda function that merges all the parts.

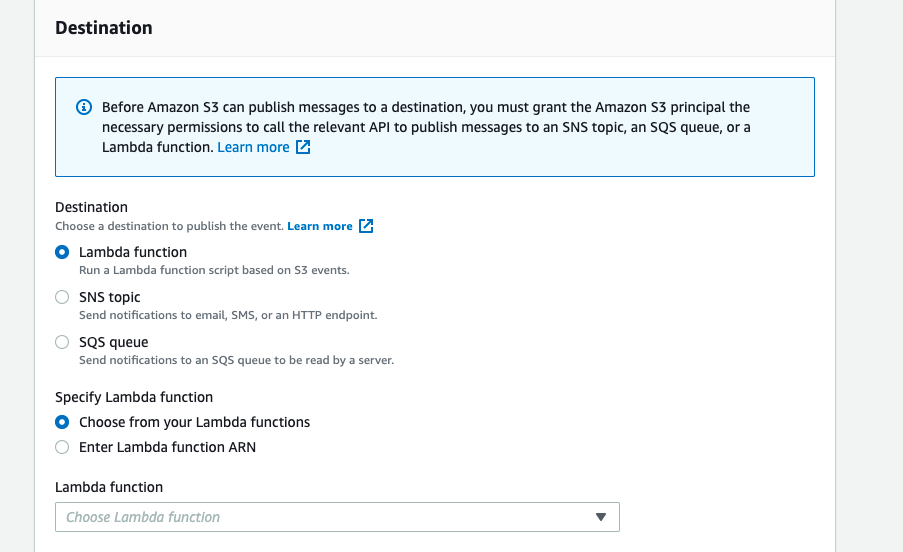

As a prerequisite, you will need an S3 bucket, a Lambda function, and the permissions that let S3 invoke the function and let the function read and write objects in the bucket.

The incoming parts of your file might be named like this:

filename_part1.ext

filename_part2.ext

If one of your own systems generates those files, have it write an empty marker file as its last step, once every part has been uploaded, named like this:

filename.final
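The naming convention above can be turned into a small helper. This is only a sketch, assuming parts follow the `<name>_part<N>.<ext>` pattern from the examples; the function names `base_from_marker` and `ordered_parts` are hypothetical. It sorts by part number rather than alphabetically, since a plain string sort would place `part10` before `part2`.

```python
import re

# Assumed naming convention, taken from the examples above:
#   <name>_part<N>.<ext> for the parts, <name>.final for the marker.
PART_PATTERN = re.compile(r"^(?P<base>.+)_part(?P<index>\d+)\.(?P<ext>[^./]+)$")


def base_from_marker(marker_key: str) -> str:
    """Return 'filename' for a marker key such as 'filename.final'."""
    if not marker_key.endswith(".final"):
        raise ValueError(f"not a marker key: {marker_key!r}")
    return marker_key[: -len(".final")]


def ordered_parts(keys, base):
    """Keep only the parts that belong to `base`, sorted by part number."""
    matched = []
    for key in keys:
        m = PART_PATTERN.match(key)
        if m and m.group("base") == base:
            matched.append((int(m.group("index")), key))
    return [key for _, key in sorted(matched)]
```

For example, `ordered_parts(["f_part10.ext", "f_part2.ext", "f_part1.ext"], "f")` yields the parts in the order 1, 2, 10.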

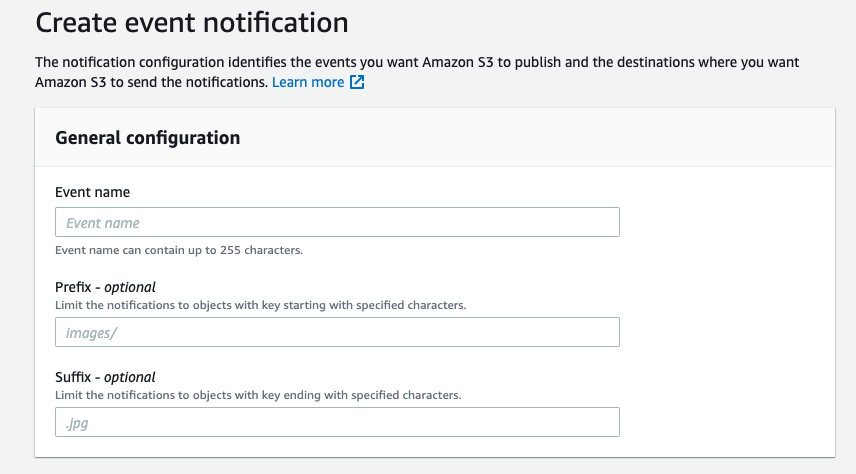

Because an S3 event trigger can filter on a key suffix, use the .final extension as the suffix so that only the marker file invokes the Lambda.

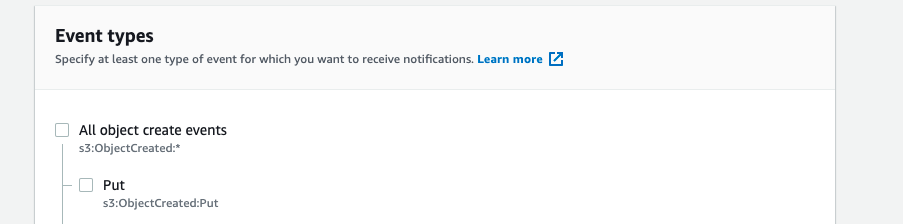

Set the event type to PUT so that the trigger fires every time an object with the .final extension is written using the PUT Object operation.
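If you would rather set the trigger up in code than in the console, the same suffix filter and PUT event type could be expressed roughly as below. This is a sketch using boto3's `put_bucket_notification_configuration`; the helper names are hypothetical, and the bucket name and Lambda ARN are values you would supply yourself.

```python
def build_notification_config(lambda_arn: str, suffix: str = ".final") -> dict:
    """Build an S3 notification configuration that fires on PUT of *<suffix> keys."""
    return {
        "LambdaFunctionConfigurations": [
            {
                "LambdaFunctionArn": lambda_arn,
                "Events": ["s3:ObjectCreated:Put"],
                "Filter": {
                    "Key": {"FilterRules": [{"Name": "suffix", "Value": suffix}]}
                },
            }
        ]
    }


def apply_notification_config(bucket: str, lambda_arn: str) -> None:
    """Attach the configuration to the bucket (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the builder above has no dependencies

    boto3.client("s3").put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=build_notification_config(lambda_arn),
    )
```

Note that this call replaces the bucket's whole notification configuration, so any existing rules need to be included in the same request. The Lambda function also needs a resource policy that allows S3 to invoke it.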

With the event configured, point it at the target Lambda, which will receive an S3 event describing the records that were put in the bucket.

The S3 event is a JSON document with a `Records` array; each record identifies the bucket name and the object key that triggered it.
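As a rough illustration, a trimmed-down S3 PUT event and a helper that pulls the bucket and key out of it might look like this. Real events carry many more fields; the bucket name, the key and the `marker_objects` helper are made up for the example. Object keys arrive URL-encoded in the event, so the sketch decodes them first.

```python
from urllib.parse import unquote_plus

# Trimmed sample of an S3 notification event; real events carry more fields.
SAMPLE_EVENT = {
    "Records": [
        {
            "eventSource": "aws:s3",
            "eventName": "ObjectCreated:Put",
            "s3": {
                "bucket": {"name": "my-upload-bucket"},  # hypothetical bucket
                "object": {"key": "incoming/my+report.final", "size": 0},
            },
        }
    ]
}


def marker_objects(event):
    """Return (bucket, key) for each record, with the key URL-decoded."""
    result = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        result.append((bucket, key))
    return result
```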

Next, implement the Lambda code that processes these records and merges the parts.
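One possible shape for that Lambda is sketched below, not a definitive implementation. It assumes the `<name>_part<N>.<ext>` and `<name>.final` naming used earlier, writes the result to `<name>.<ext>`, and merges in memory, which only suits files that fit comfortably in the function's memory. `merge_parts` is a hypothetical helper; the S3 client is passed in so the logic can be exercised without AWS.

```python
import re
from urllib.parse import unquote_plus


def merge_parts(s3, bucket: str, marker_key: str) -> str:
    """Concatenate <base>_part<N>.<ext> objects into <base>.<ext>; return the new key."""
    base = marker_key[: -len(".final")]
    pattern = re.compile(re.escape(base) + r"_part(\d+)\.([^./]+)$")

    # Collect every part under the shared prefix, following pagination.
    parts = []
    kwargs = {"Bucket": bucket, "Prefix": base + "_part"}
    while True:
        page = s3.list_objects_v2(**kwargs)
        for obj in page.get("Contents", []):
            m = pattern.match(obj["Key"])
            if m:
                parts.append((int(m.group(1)), m.group(2), obj["Key"]))
        if not page.get("IsTruncated"):
            break
        kwargs["ContinuationToken"] = page["NextContinuationToken"]

    if not parts:
        raise ValueError(f"no parts found for {marker_key!r}")

    parts.sort()  # numeric order, so part10 comes after part2
    merged_key = f"{base}.{parts[0][1]}"

    # Simple in-memory merge: fine for small files, not for multi-GB ones.
    body = b"".join(
        s3.get_object(Bucket=bucket, Key=key)["Body"].read() for _, _, key in parts
    )
    s3.put_object(Bucket=bucket, Key=merged_key, Body=body)
    return merged_key


def lambda_handler(event, context):
    import boto3  # included in the AWS Lambda Python runtime

    s3 = boto3.client("s3")
    merged = []
    for record in event["Records"]:
        bucket = record["s3"]["bucket"]["name"]
        key = unquote_plus(record["s3"]["object"]["key"])
        if key.endswith(".final"):
            merged.append(merge_parts(s3, bucket, key))
    return {"merged": merged}
```

For large files, a multipart upload that copies each part server-side (`upload_part_copy`) avoids pulling the data through the Lambda, with the caveat that S3 requires every part except the last to be at least 5 MiB.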
